How to share data in the Flanders Smart Data Space

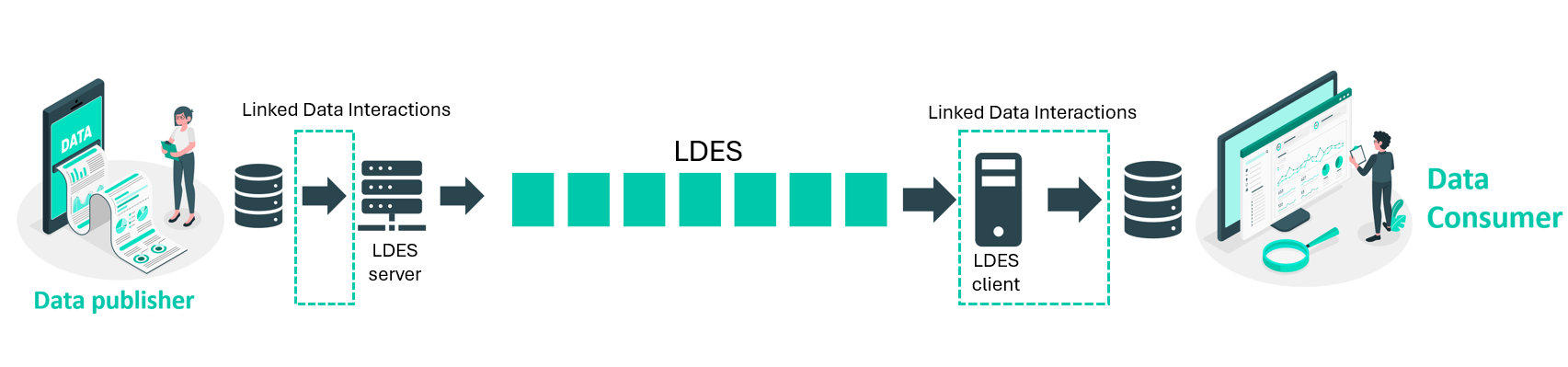

This technical documentation is here to guide you through the pipeline architecture that needs to be set up to share and collect data within the Flanders Smart Data Space. The Flanders Smart Data Space allows you to publish and consume both open and closed data efficiently. For this purpose, a technical standard (Linked Data Event Streams) has been chosen that allows data users to be kept in sync with different dynamic source data sets. It allows data to be exchanged between silos sustainably and cost-effectively using domain-specific ontology for fast and slowly changing data streams.

Before exploring how to set up data pipelines, make sure to familiarize yourself with the foundational concepts. This includes understanding what the Flanders Smart Data Space is all about, grasping the Linked Data principle, and knowing what a Linked Data Event Stream (LDES) typically entails.

Set up your Pipeline, and you are good to go

To either publish your data with or access data from the Flanders Smart Data Space, setting up a pipeline is essential. You can kickstart this process instantly using open-source Java components that are ready to run in a docker container.

Are you a data publisher that wants to publish your data in the Flanders Smart Data Space as a Linked Data Event Stream (LDES)? We explain what the publishing pipeline should look like, without going too deeply into the specific configuration of the necessary building blocks. We will assist you in selecting the right open-source building blocks that fit your specific needs, focusing on ease of integration. After this, you can go directly to the tutorials, with the basic concepts and background knowledge in your backpocket.

Are you a data consumer eager to delve into your first LDES experience? Setting up a dedicated pipeline is once again essential. This round requires establishing a specialized consumption pipeline designed to sequentially harvest LDES members and propel them downstream. By assembling various components, you can create a customized LDES consuming pipeline tailored to your specific needs.

As a data intermediary, your objective is to collect LDES streams and then release a revamped, enriched LDES data stream. The capability to utilize data processing techniques for cleaning, restructuring, or augmenting the initial datasets requires the establishment of a specialized LDES consumption pipeline. Your role involves implementing a resilient and scalable pipeline that not only facilitates an uninterrupted data flow but also aligns with Linked Data principles, thereby transforming your enhanced LDES stream into a crucial resource within the data ecosystem.

Learn by doing

Based on a few use cases, we try to teach you in a light-hearted way how to publish or consume an LDES. In these tech docs we do not go into depth about the different possible parameters, but we show a working configuration for the proposed use case. Detailed information about the technical parameters or configuration options can be found under sections of the building blocks individually.